Do you want your BlogSpot blog's content crawled, indexed, and ranked quickly? If the answer is yes, you are in the right place.

First, let's find out what crawling and indexing are.

What is crawling?

In general terms, crawling involves discovering new or updated pages to add to Google or Bing.

The process is conducted by search engine bots or spiders.
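To make the idea concrete, here is a minimal Python sketch of how a bot discovers pages by following links. It assumes a small, hypothetical in-memory link graph (`LINK_GRAPH`, with a placeholder blog address) in place of real HTTP requests and HTML parsing, and uses a simple breadth-first search. Real search engine crawlers are far more elaborate.

```python
from collections import deque

# Hypothetical blog address and link graph: each URL maps to the links found
# on that page. A real bot would download each page and parse its HTML instead.
BASE = "https://example.blogspot.com"
LINK_GRAPH = {
    f"{BASE}/": [f"{BASE}/2024/01/first-post.html", f"{BASE}/p/about.html"],
    f"{BASE}/2024/01/first-post.html": [f"{BASE}/2024/02/second-post.html", f"{BASE}/"],
    f"{BASE}/p/about.html": [],
    f"{BASE}/2024/02/second-post.html": [],
}


def crawl(seed: str) -> list[str]:
    """Breadth-first discovery of every page reachable from `seed`."""
    discovered = [seed]  # pages in the order they were found
    seen = {seed}        # never queue the same URL twice
    queue = deque([seed])
    while queue:
        url = queue.popleft()
        for link in LINK_GRAPH.get(url, []):
            if link not in seen:
                seen.add(link)
                discovered.append(link)
                queue.append(link)
    return discovered


if __name__ == "__main__":
    for page in crawl(f"{BASE}/"):
        print(page)
```

Starting from the homepage, the bot finds the first post and the About page, then reaches the second post only through the link inside the first post. This is why internal links help new pages get discovered.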

What is indexing?

Indexing is the process of analyzing the information found during crawling and storing it in an index.

An index is like a big library of web pages that can be retrieved when one performs a search.
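One common way to build such a "library" is an inverted index, which maps each word to the pages that contain it. The sketch below is a simplified illustration of that idea only, not a description of how Google or Bing actually store their indexes; the page paths and text in `PAGES` are made up.

```python
from collections import defaultdict

# Hypothetical crawled pages: URL path -> page text.
PAGES = {
    "/2024/01/first-post.html": "How to set up robots.txt on Blogger",
    "/2024/02/second-post.html": "Blogger header tags explained",
}


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map each lower-cased word to the set of URLs whose text contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the URLs that contain every word of the query (AND search)."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))  # copy so the index is not mutated
    for word in words[1:]:
        results &= index.get(word, set())
    return results


if __name__ == "__main__":
    index = build_index(PAGES)
    print(sorted(search(index, "blogger")))
    print(search(index, "robots.txt blogger"))
```

Because the lookup goes from word to pages, answering a query does not require rereading every page, which is what makes retrieval fast once a page is indexed.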

The terms crawling and indexing are sometimes used interchangeably, although they refer to different things.

Moving on from definitions: when you create a new website, it usually takes time for search engines such as Google and Bing to crawl and index your pages.

Nevertheless, you can help speed up the process by configuring the crawler and indexing settings in your Blogger dashboard.

Custom Robots.txt

Log in to the Blogger dashboard.

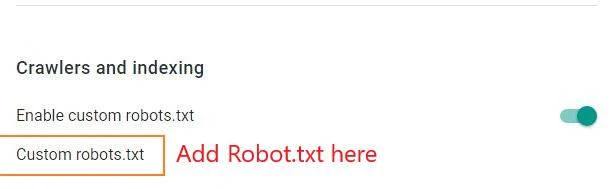

Then go to Settings and scroll down to the Crawlers and indexing section.

To begin with, enable the custom robots.txt option.

Click Custom robots.txt, then copy and paste the robots.txt file below.

In the Sitemap line, replace the domain with your own domain name.

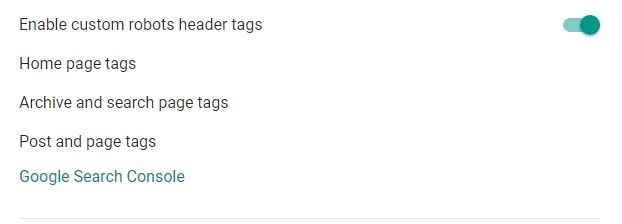
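If you want to check what a robots.txt file actually allows before saving it, Python's standard library can parse it offline. The sketch below assumes a typical Blogger-style file: it lets the AdSense crawler `Mediapartners-Google` fetch everything, blocks `/search` (label and search-result pages) for all other bots, and lists the sitemap. `www.example.com` is a placeholder domain, and your own file may differ.

```python
from urllib.robotparser import RobotFileParser

# Assumed Blogger-style robots.txt; www.example.com is a placeholder domain.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    base = "https://www.example.com"
    for path in ["/2024/01/my-post.html", "/p/about.html", "/search/label/SEO"]:
        print(path, rules.can_fetch("Googlebot", base + path))
    print(rules.site_maps())  # site_maps() requires Python 3.8+
```

Python's parser applies the first matching rule in a group, whereas Google uses the most specific (longest) matching rule. For this particular file both approaches give the same answers: posts and pages are allowed, and `/search` URLs are blocked.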

Custom robot header tags

First, enable custom robot header tags. The Homepage tags, Archive and search page tags, and Post and page tags options will then appear.

Homepage tags

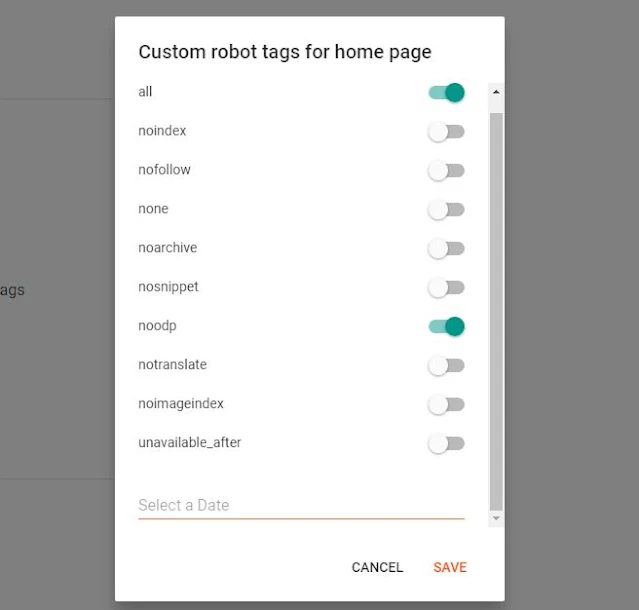

Click Homepage tags, enable all and noodp, then save.

Archive and search page tags

Under Archive and search page tags, enable noindex and noodp, then save.

Post and page tags

In this section, enable all and noodp, then save.
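To summarize the three settings, the following Python sketch maps each page type to the directives chosen above and checks whether a given URL would be indexable. The URL patterns used to classify pages (`/search...` for label and search pages, `/YYYY/MM/` and `YYYY_MM_DD_archive.html` for archives) are assumptions about typical Blogger URLs, and the names `HEADER_TAGS`, `page_type`, and `is_indexable` are hypothetical. Here `all` means no restrictions, while `noindex` asks search engines not to index the page.

```python
import re

# Directives chosen in the steps above, keyed by a hypothetical page-type name.
HEADER_TAGS = {
    "home": ["all", "noodp"],
    "archive_search": ["noindex", "noodp"],
    "post_page": ["all", "noodp"],
}

# Assumed Blogger archive URL patterns (not an official Blogger API).
ARCHIVE_RE = re.compile(r"^/\d{4}/\d{2}/?$|^/\d{4}_\d{2}_\d{2}_archive\.html$")


def page_type(path: str) -> str:
    """Classify a Blogger URL path (query string allowed) into a tag group."""
    if path in ("", "/"):
        return "home"
    if path.startswith("/search") or ARCHIVE_RE.match(path):
        return "archive_search"
    return "post_page"  # posts such as /2024/01/slug.html and pages under /p/


def is_indexable(path: str) -> bool:
    """Indexable unless the directives include noindex or none (noindex, nofollow)."""
    directives = HEADER_TAGS[page_type(path)]
    return "noindex" not in directives and "none" not in directives


if __name__ == "__main__":
    for path in ["/", "/2024/01/first-post.html", "/p/about.html",
                 "/search/label/SEO", "/2024/01/"]:
        print(path, page_type(path), is_indexable(path))
```

With these settings, label, search, and archive pages stay out of the index, which avoids near-duplicate listings of the same posts, while the homepage, posts, and static pages remain indexable.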